Match.com’s matching algorithm is ridiculously complex: they are mashing up quiz answers with behavioral data, and now they are experimenting with facial recognition. Excellent to see. I brought that up when I visited the Match mothership a few years ago. Back then I was working with Sobayli, which offered basically any type of pattern matching in images, but they were too startup-y in that they wanted to work immediately with EMC and other large data storage companies. I brought them to iDate and…crickets. Sobayli offered image and pattern recognition five years ago, we pitched it to Match, and now Match is finally going for it. Good for them.

In addition to asking each member anywhere from 15 to 100 questions, the company weeds through the essays they fill out about what they want and gives points to each user based on each parameter in the system — from education and the vocabulary they use, to hair color and religion. People with a similar amount of points, which are weighted on certain areas, have a greater chance of being compatible.
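The points idea described above can be sketched in a few lines. This is only an illustration: the parameter names, the weights, and the `compatibility` formula are assumptions made up for the example, not anything Match.com has published.

```python
# Hypothetical parameters and weights; Match.com's real values are not public.
WEIGHTS = {"education": 3.0, "vocabulary": 2.0, "religion": 2.5, "hair_color": 0.5}


def weighted_points(profile, weights=WEIGHTS):
    """Sum a member's per-parameter points, scaled by each parameter's weight."""
    return sum(weight * profile.get(name, 0.0) for name, weight in weights.items())


def compatibility(a, b, weights=WEIGHTS):
    """Return a score in (0, 1]; closer weighted totals give a higher score."""
    gap = abs(weighted_points(a, weights) - weighted_points(b, weights))
    return 1.0 / (1.0 + gap)


alice = {"education": 4, "vocabulary": 3, "religion": 1, "hair_color": 2}
bob = {"education": 4, "vocabulary": 3, "religion": 1, "hair_color": 2}
carol = {"education": 1, "vocabulary": 1, "religion": 1, "hair_color": 2}

# Alice and Bob have identical weighted totals, so they score higher than Alice and Carol.
print(compatibility(alice, bob), compatibility(alice, carol))
```

Comparing only totals is the simplest reading of "a similar amount of points"; a real system would more likely compare members parameter by parameter.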

“We also take historical data into account, as well as distance — people in Dallas are more inclined to date someone far away than someone in Manhattan, who might not want to date someone who lives in Queens,” Thombre said.

The site also looks at what people say they want in a partner and who they are actually pursuing on the site.

“People have a check list of what they want, but if you look at who they are talking to, they break their own rules. They might list ‘money’ as an important quality in a partner, but then we see them messaging all the artists and guitar players,” he said.

Match.com also sends matches based on this behavior: “Similar to Netflix or Amazon, we know that if you liked one person, you might like another that is similar,” Thombre said. “But of course it is different here. Carlito’s Way may be your favorite movie, but in this case, he has to like you back for it to be a match.”
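Thombre's point that "he has to like you back" is what separates dating from movie recommendations, and a rough sketch makes the difference concrete. Everything here is assumed for illustration: profiles are reduced to sets of tags, similarity is plain Jaccard overlap, and the function names are invented.

```python
def similarity(a, b):
    """Jaccard overlap between two sets of profile tags."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def predicted_interest(liked_profiles, candidate):
    """Best similarity between the candidate and anyone the member already liked."""
    return max((similarity(liked, candidate) for liked in liked_profiles), default=0.0)


def reciprocal_score(a_likes, a_profile, b_likes, b_profile):
    """Both sides must be interested, so take the weaker of the two predictions."""
    a_wants_b = predicted_interest(a_likes, b_profile)
    b_wants_a = predicted_interest(b_likes, a_profile)
    return min(a_wants_b, b_wants_a)


a_profile = {"teacher", "hiking"}
b_profile = {"artist", "guitar", "dallas"}
a_likes = [{"artist", "guitar"}]      # A keeps messaging artists and guitar players
b_likes_bankers = [{"banker"}]        # B only likes bankers: no match for A
b_likes_teachers = [{"teacher", "hiking"}]

print(reciprocal_score(a_likes, a_profile, b_likes_bankers, b_profile))
print(reciprocal_score(a_likes, a_profile, b_likes_teachers, b_profile))
```

Taking the minimum means a one-sided crush scores no better than mutual indifference, which a Netflix-style recommender never has to worry about.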

Moving forward, Thombre says Match.com wants to experiment with facial recognition technology via the site.

So Match now asks up to half as many questions as eHarmony. Having Match watch me react to my first views of dating profiles is both exhilarating and spooky. They really need to create a beta site so a subset of users can opt in to the trials, instead of limiting tests to San Diego or Phoenix or wherever they test their stuff.

HowAboutWe took a different tack.

“We actually launched HowAboutWe with a robust algorithm, which we subsequently got rid of after realizing that we had put the cart far, far before the horse,” Schildkrout said. “It’s only after you achieve significant liquidity in a market that you can build a useful algorithm.”

Build the framework and make it easy to evolve. They had little idea what they were doing early on, but you can make lots of mistakes when you have $20 million in the bank.

Read How Online Dating Sites Use Data to Find ‘The One’.

Here’s what some other companies are doing with facial recognition.

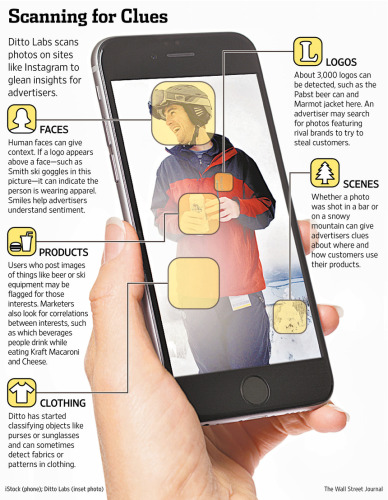

Some companies, such as Ditto Labs Inc., use software to scan photos—the image of someone holding a Coca-Cola can, for example—to identify logos, whether the person in the image is smiling, and the scene’s context. The data allow marketers to send targeted ads or conduct market research. Read Smile marketing firms are mining your selfies.

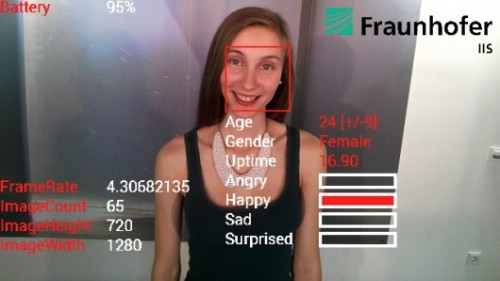

Over a number of years, researchers at Germany’s Fraunhofer Institute have developed software to measure human emotion through face detection and analysis. Dubbed SHORE (Sophisticated High-speed Object Recognition), the technology has the potential to aid communication for those with disabilities. Now the team has repurposed the software as an app for Google Glass, with a view to bringing its emotion-detecting technology to the world.

This could be very cool for dating sites. Let the camera read your reaction to viewing profiles and tweak the algorithm accordingly. Read Fraunhofer’s Google Glass app detects human emotions in real time.
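Here is roughly what "tweak the algorithm accordingly" could look like. This is purely a thought experiment: the `reaction` score (imagined as something an emotion detector reports, from -1 for a frown to +1 for a smile), the feature names, and the learning rate are all assumptions, not part of SHORE or any dating site's system.

```python
def update_weights(weights, profile_features, reaction, learning_rate=0.1):
    """Return new preference weights nudged by a reaction to one viewed profile.

    reaction is a hypothetical score in [-1, 1]; only features present in the
    viewed profile are adjusted, and the input dict is left untouched.
    """
    reaction = max(-1.0, min(1.0, reaction))  # clamp out-of-range readings
    updated = dict(weights)
    for feature in profile_features:
        updated[feature] = updated.get(feature, 0.0) + learning_rate * reaction
    return updated


weights = {"artist": 0.5, "banker": 0.5}

# The member smiles at an artist's profile, so "artist" gains a little weight.
weights_after_smile = update_weights(weights, {"artist"}, reaction=0.8)

# The member frowns at a banker's profile, so "banker" loses a little weight.
weights_after_frown = update_weights(weights_after_smile, {"banker"}, reaction=-0.5)

print(weights_after_frown)
```

Small steps matter here: one raised eyebrow should not rewrite someone's whole checklist.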

Researchers at Ohio State University found that humans are capable of reliably recognizing more than 20 facial expressions and corresponding emotional states.

Perceiving whether someone is sad, happy, or angry by the way he turns up his nose or knits his brow comes naturally to humans. Most of us are good at reading faces. Really good, it turns out.

So what happens when computers catch up to us? Recent advances in facial recognition technology could give anyone sporting a future iteration of Google Glass the ability to detect inconsistencies between what someone says (in words) and what that person says (with a facial expression). Technology is surpassing our ability to discern such nuances.

Scientists long believed humans could distinguish six basic emotions: happiness, sadness, fear, anger, surprise, and disgust. But earlier this year, researchers at Ohio State University found that humans are capable of reliably recognizing more than 20 facial expressions and corresponding emotional states—including a vast array of compound emotions like “happy surprise” or “angry fear.”

Read Computers Are Getting Better Than Humans at Facial Recognition.